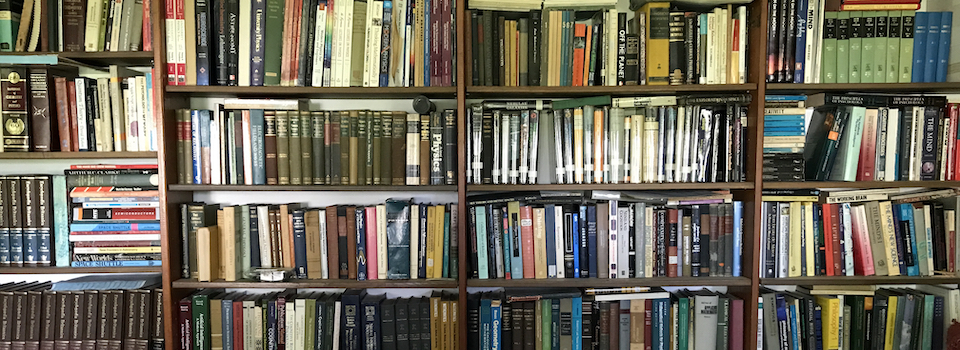

Here is some circumstantial evidence that I love what I do: a few pictures of my office in the Physics Department at Wells College, taken a few years back after I had crammed half my library into it...